One of the fundamental challenges facing society today is preserving economic growth¹ while tackling a trifecta of goals: reducing greenhouse gas (GHG) emissions, producing less pollution (especially in urban areas), and reducing the energy requirement of transport logistics (which is linked to both of the preceding issues, as well as to the economy²).

Why do we need to solve these interrelated but distinct problems?

GHG emissions (carbon dioxide and CO₂-equivalent emissions) are the principal driver of climate change, which is an existential threat: not necessarily to humanity in general (presumably some people will survive catastrophic climate change), but certainly to our way of life, our standard of living, and our ability as a society to progress technologically. A point that is not made nearly enough is that pretty much all of the easily accessible raw materials, such as coal, iron ore, and oil, have already been extracted from the Earth's crust. If we lose access to the technology stack humanity has now developed because of a large-scale destabilisation of civilisation, it will be almost impossible for our descendants or successors to develop the same stack; complex societies on this planet will not be able to emerge again for a very, very long time. This is a serious problem!

Pollution, as distinct from emissions, tends to be a more localised issue and covers a wide variety of outputs. There are several types of pollution, but here we are mainly referring to poor air quality caused by localised outputs, including metallic and plastic particulate matter, carbon black, and gases such as nitrogen oxides (NOx), particularly nitrogen dioxide (NO₂).

Particulate matter (PM) is a particularly pernicious issue: it is linked to respiratory and cardiovascular disease, and is increasingly being associated with Parkinson's disease, Alzheimer's disease, dementia, and other ailments. Human physiology has not really evolved to deal with extremely fine particulates, particularly those 2.5 microns (µm) in diameter or smaller, referred to as PM2.5. Once inhaled, these very fine particulates are small enough to pass through the alveoli of the lungs into the bloodstream, and from there through the blood-brain barrier, where they can lodge in the delicate neural structures of the brain.

PM is emitted as a byproduct of internal combustion, but also by vehicle travel and braking themselves, specifically through tyre wear and brake dust.

Pollution also covers localised emissions of gases such as NOx, which contribute to smog and are strongly linked to respiratory disease and increased mortality: up to 6.5 million deaths per year are attributable to poor air quality!

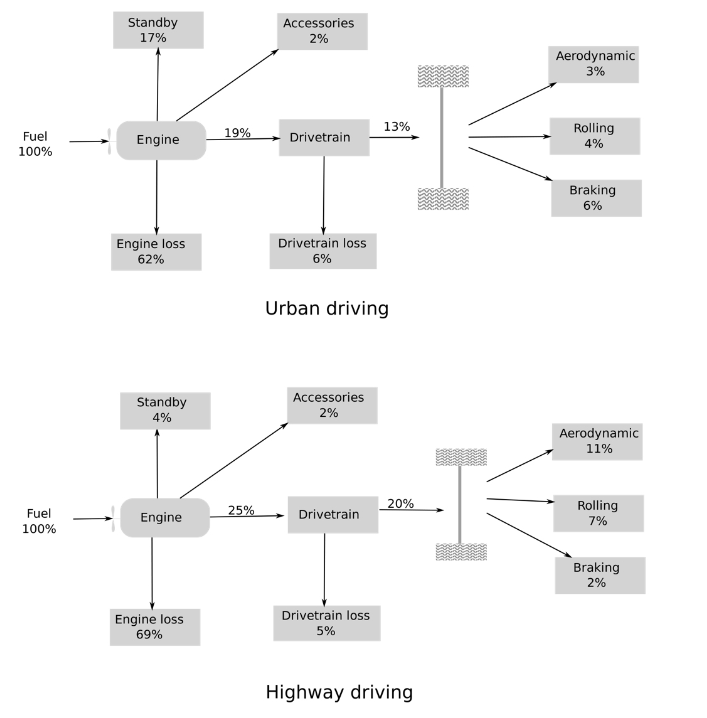

Reducing the energy required to move cubes may seem unrelated to the two preceding issues. But transport activity is growing and becoming more intensive, driven in particular by the increasing penetration of e-commerce and by rising expectations of convenient access to produce and materials; next-day or even same-day delivery is not going away³. What is necessary to sustain this growth, therefore, is a reduction in the energy required per unit of goods moved⁴ over the longer term, in order to:

- Reduce the GHG emissions and pollution associated with transport logistics, and

- Reduce the costs of transport logistics (which is good for economic growth).

The simplest way to reduce the energy requirement (which often depends on external, and sometimes unpredictable, factors) is simply to use less energy. This sounds like a tautology, but it is amazing how many Rube Goldberg-style solutions claim some kind of reduction in energy use through a very complex system⁵. At the end of the day, physics will always win. Efficiency gains can, however, be realised by developing straightforward solutions that apply sophisticated, advanced controls to maximise efficiency.

Where we do these things matters as well, since we want to get the most bang for our buck, so to speak.

GHG emissions are, as previously mentioned, a global phenomenon: GHG emitted in California will, broadly speaking, disproportionately affect those in the so-called “Global South”. Reducing GHG emissions by reducing energy requirements therefore has a net positive effect wherever it is deployed, and so it makes sense to deploy what are generally more expensive GHG-reducing technologies where per capita GHG emissions are highest, which tends to be in locales with stronger economies.

Pollution is an altogether more local problem. As it turns out, built-up and urban areas are by far the most sensitive to pollution, but, ironically and unfortunately, these urban conurbations are also the most prone to it. This is due both to the duty cycles these geographies impose (lots of start/stop and frequent tight cornering) and to conditions that hinder the dispersal of pollution (lower average speeds, and buildings that channel smog and particulate matter).

This has a drastic impact on those living and working in these locales, who tend to be poorer and to have fewer prospects for economic and social mobility. It also, again, falls disproportionately on the most vulnerable⁶ in the population: another social injustice brought about by the readily available energy of liquid hydrocarbons and by externalities displaced onto others.

Diesel is worse than gasoline in this respect, and gasoline is in turn worse than electric-only operation. Diesel combustion is relatively incomplete and emits soot (carbon black); NOx and other smog-forming gases; and metallic PM2.5, which, as described above, human physiology is ill-adapted to filter out. Gasoline is generally somewhat better, with a cleaner combustion, but still produces significant levels of pollution. In urban areas, therefore, a zero-tailpipe-emission solution is preferable.

So: technologies should be deployed where they will have the most meaningful impact. Technology for the sake of technology is generally unhelpful. The concept of low- or zero-emission long-haul trucks is all well and good when it comes to reducing GHG emissions, but because the pollution is dispersed over the long distances of highway legs, it is less of an issue outside urban areas⁷. These long-distance legs often start and end in urban or urbanised areas, however, and the urban and semi-urban delivery segment has the most challenging duty cycle to solve for; but solving this difficult problem will have the most significant impact on air quality, directly saving lives in both the short and the long term.

What is required, then, is the best option: one that will enable massive reductions in GHG and PM today, and apply those reductions in the short term and where they matter most. Because of the rapidity of anthropogenic warming, solutions need to be deployed urgently, and therefore cannot wait for large-scale electrical generation, distribution, and charging infrastructure that has yet to be developed and paid for⁸; the problem cannot continue to be kicked down the road.

Operators also need meaningful pull factors to encourage adoption of effective solutions. Push factors such as government legislation can be highly effective, but they are often highly politicised and so cannot be relied on to deliver the necessary results. Solutions must therefore be economically viable, environmentally meaningful, and able to slot into existing operations; otherwise they will never be taken up at the scale and speed needed to make a difference. While many large organisations are paying for semi-effective solutions out of ESG budgets, these are neither viable in the long term nor scalable, and the problems are such that widescale adoption is needed today.

We need to provide the best solution that can be deployed at scale today: one that delivers the most impactful outcomes in the most vulnerable geographies, encourages zero-emission (ZE) take-up, and gives all logistics providers the ability to deploy ZE at scale in short order.

We at Bristol Superlight have developed such a solution, and have proven its operation over the last few years with some of the most demanding customers, in some of the most demanding applications. Our vehicle is lightweight and sophisticated, uses highly advanced controls, and delivers meaningful zero-emission operation in urban areas while slotting seamlessly into logistics carriers' day-to-day operations. It is real, it works, and it has covered thousands of kilometres on the road in revenue service. It is economically viable from both a capital and an operating-cost perspective, with significant reductions in cost per pallet-km compared not only with conventional diesel trucks but also with other electric trucks. We have chosen not to electrify a truck, but to redefine it. And we have the evidence to back it up. It is the culmination of years of work and effort, and this is just the start.

¹ Economic growth for its own sake is of questionable merit. However, parts of the world have reached a quality of life that their inhabitants would prefer not to see diminished, and the rest of the world would like to reach at least that same level. So, economic growth. Whether this path is advisable or healthy is debatable, to say the least, and is a whole other problem; nevertheless, we all exist in the real world, and human behaviour is, as the evidence indicates, unlikely to change drastically. ^

² It is a truism that economic growth (and, more specifically, prosperity) is generally linked to reductions in transport costs. The increasingly cheap movement of people and goods has lifted more people out of poverty than ever before. The paradoxical corollary is that this same cheap movement of people and goods has produced greater externalities, in terms of both GHG emissions (and the resulting climate change) and pollution (primarily, but not exclusively, affecting air quality), which disproportionately impact geographies with a lower level of economic development. ^

³ On the contrary, this is in some ways an economically more efficient allocation of labour; as Jeff Wilke, former CEO of Worldwide Consumer at Amazon.com, says, “delivery in some sense replaces your labor, which was unpaid, to get in your car and drive somewhere to buy something and come back.” ^

⁴ The humble pallet comes to the fore here as a near-universal footprint for the easy mechanical transfer of goods; it is as ubiquitous and as game-changing as its larger cousin, the shipping container (measured in twenty-foot equivalent units, or TEU). A loaded pallet is sometimes referred to as a cube, after the combined volume of the pallet and its payload. ^

⁵ Complexity is not the same as sophistication. ^

⁶ Consider the tragic case of the child Ella Kissi-Debrah, who has the unfortunate distinction of being the first person in the UK to have air pollution listed as a cause of death. ^

⁷ It has to be noted, though, that warehouse workers servicing these diesel trucks are suing their employers over their direct, prolonged exposure to pollution from diesel vehicles. ^

⁸ Not just charging infrastructure: the International Energy Agency has estimated that the transition to electrified vehicles requires roughly 50 more lithium mines, 60 more nickel mines, and 17 more cobalt mines just to meet the 2030 EV projections. Going all-in on long-range battery-electric vehicles (BEVs) for every single commercial vehicle is simply not feasible in the near to medium term. ^